Last updated: Jan 24, 2023

Disclaimer: Our team is constantly compiling and adding new terms that are known throughout the SEO community and in Google terminology. You may be directed to SEO Terms on cmlabs.co from third parties or links. We do not investigate or check such external links for accuracy or reliability, and we do not assume responsibility for the accuracy or reliability of any information offered by third-party websites.

A website's log file is very useful because it contains information that can't be found anywhere else. It also lets you see how Google behaves on your website.

So what is log file analysis? This guide explains what information log files provide and how to access them.

A log file is a file, created and continuously updated by the server, that records the access requests made to a website. Note that the log file discussed in this article is different from the "log file" used in web development.

In this article, "log file" means the access log file, which stores the history of HTTP requests made to the server. In web development, by contrast, a log file records events that take place in a system and is used to find bugs.

Access log files show which clients request access to the website and which pages they access. A client can be a human user or a web crawler such as Googlebot.

The web server creates and updates a website's access log file and stores it for a certain period of time. You can use the log file to understand in detail how users and search engines interact with your website.

Finding and accessing the log file may be a little difficult the first time, but the information it provides is very valuable. So let's take a closer look at this log file analysis guide.

The function of a log file is to provide information about the access requests made to a website. You will not find this information in any other tool. The following is some of the information contained in a log file:

As mentioned earlier, log files are stored on the web server, so you need access to the server to get them. If you don't have this access, ask the IT team or website developer to share a copy of the log file.

However, accessing log files is not always easy. You may face several issues and challenges, such as:

Discuss the issues described above with the developer. Make sure to explain why the log file analysis is urgent and work with the developer to resolve the issue.

Log files record every access request to the website, including requests from web crawlers. This data tells you how web crawlers like Googlebot behave on your website, and you can't get this information from anywhere except the log file.

With log files, you can use information about web crawler activity to optimize crawling and indexing. This is why log file analysis is so important in SEO.

Here are some ways you can use log files to improve your website's SEO:

You can use log files to analyze how often web crawlers visit your website. You can also check whether the crawled pages are important pages that need to be indexed.
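To see how often a crawler requests each URL, you can count matching log entries per path. The Python sketch below assumes the combined log format shown in the sample entry in this guide and treats any user agent containing "Googlebot" as Googlebot. `crawl_counts` and `make_line` are hypothetical helpers, and the log entries are made up for illustration.

```python
import re
from collections import Counter

# Combined log format, as in this guide's sample entry (assumption: your server uses it).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<identity>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def make_line(path, user_agent=GOOGLEBOT_UA):
    """Build a hypothetical log entry for illustration."""
    return (f'203.0.113.7 - - [28/Oct/2022:11:20:01 -0400] "GET {path} HTTP/1.1" '
            f'200 512 "-" "{user_agent}"')


def crawl_counts(lines, crawler_token="Googlebot"):
    """Count how many times the crawler requested each URL path."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        # Skip malformed lines and requests from other user agents.
        if match and crawler_token.lower() in match.group("user_agent").lower():
            counts[match.group("path")] += 1
    return counts


if __name__ == "__main__":
    log = [
        make_line("/product/type1/"),
        make_line("/product/type1/"),
        make_line("/search?q=shoes"),
        make_line("/product/type1/", user_agent=BROWSER_UA),
    ]
    for path, hits in crawl_counts(log).most_common():
        print(hits, path)
```

Paths that receive many crawler hits but don't need to be indexed are the ones worth reviewing first.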

If web crawlers visit a lot of unimportant pages, you can reduce those visits with robots.txt. This way, you make more efficient use of your crawl budget.
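Before changing robots.txt, you can check which crawled URLs a proposed set of rules would block. This sketch uses Python's standard `urllib.robotparser` module; the rules in `PROPOSED_RULES`, the domain `https://example.com`, the `blocked_paths` function, and the paths are hypothetical. Python's parser follows the original robots.txt conventions and may not interpret every rule (for example, wildcard patterns) exactly as Googlebot does, so treat the result as a first check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules you are considering; replace them with your own.
PROPOSED_RULES = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""


def blocked_paths(paths, rules=PROPOSED_RULES, user_agent="Googlebot",
                  site="https://example.com"):
    """Return the crawled paths that the given robots.txt rules would block."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    parser.modified()  # record the rules as loaded so can_fetch() evaluates them
    return [path for path in paths if not parser.can_fetch(user_agent, site + path)]


if __name__ == "__main__":
    # Hypothetical paths taken from a crawl-frequency report.
    crawled = ["/product/type1/", "/search?q=shoes", "/cart/checkout"]
    print(blocked_paths(crawled))
```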

The log file stores the HTTP status code for each access request the server receives. Other tools can show this information as well, but log files can also tell you how often a URL returns a 404 error code when it is requested.

By knowing which URLs return 404 errors most often, you can decide which URLs to prioritize for fixes.
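Counting 404 responses per URL produces that priority list. A minimal Python sketch, assuming the combined log format from the sample entry in this guide; `top_404_urls`, `make_line`, and the log entries are hypothetical. It counts 404s from every client; to focus on crawlers, filter the lines by user agent first.

```python
import re
from collections import Counter

# Combined log format, as in this guide's sample entry (assumption: your server uses it).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<identity>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def make_line(path, status=200):
    """Build a hypothetical log entry for illustration."""
    return (f'203.0.113.7 - - [28/Oct/2022:11:20:01 -0400] "GET {path} HTTP/1.1" '
            f'{status} 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; '
            f'+http://www.google.com/bot.html)"')


def top_404_urls(lines):
    """Return (path, count) pairs for 404 responses, most frequent first."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and match.group("status") == "404":
            counts[match.group("path")] += 1
    return counts.most_common()


if __name__ == "__main__":
    log = [make_line("/old-page", 404)] * 3 + [
        make_line("/missing", 404),
        make_line("/product/type1/"),
    ]
    for path, hits in top_404_urls(log):
        print(f"{hits:>4}  {path}")
```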

Log files let you monitor historical data on web crawler access requests. This history reveals the patterns and behavior of web crawlers on your website, for example how many times a crawler visits a page each month and which pages it visits most often.
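As a sketch of that kind of monitoring, the Python example below groups Googlebot requests by calendar month and URL path. It assumes the combined log format from the sample entry in this guide, treats any user agent containing "Googlebot" as Googlebot, and parses English month abbreviations such as `Oct`. `monthly_crawl_counts`, `make_line`, and the entries are hypothetical.

```python
import re
from collections import Counter, defaultdict
from datetime import datetime

# Combined log format, as in this guide's sample entry (assumption: your server uses it).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<identity>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def make_line(path, time, user_agent=GOOGLEBOT_UA):
    """Build a hypothetical log entry for illustration."""
    return f'203.0.113.7 - - [{time}] "GET {path} HTTP/1.1" 200 512 "-" "{user_agent}"'


def monthly_crawl_counts(lines, crawler_token="Googlebot"):
    """Map "YYYY-MM" to a Counter of crawler requests per path in that month."""
    counts = defaultdict(Counter)
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or crawler_token.lower() not in match.group("user_agent").lower():
            continue
        # Timestamps look like 28/Oct/2022:11:20:01 -0400; %b expects English month names.
        timestamp = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z")
        counts[timestamp.strftime("%Y-%m")][match.group("path")] += 1
    return counts


if __name__ == "__main__":
    log = [
        make_line("/product/type1/", "28/Oct/2022:11:20:01 -0400"),
        make_line("/product/type1/", "29/Oct/2022:08:00:00 -0400"),
        make_line("/product/type1/", "02/Nov/2022:09:15:00 -0400"),
        make_line("/blog/", "02/Nov/2022:09:20:00 -0400"),
    ]
    for month, paths in sorted(monthly_crawl_counts(log).items()):
        print(month, dict(paths))
```

Comparing the per-month counters makes a sudden drop or spike in crawling easy to spot.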

When the pattern or behavior of a web crawler changes, you will notice it right away and can find out what caused it. Business website owners can also use this data to improve their websites, for example by creating campaigns and developing product pages more easily.

Log files are very useful in SEO if you use them well, and the main way to do that is through log file analysis.

As part of a technical SEO strategy, log file analysis gives you information about web crawler activity on your website.

By doing a log file analysis, you can find opportunities to optimize crawling and indexing so that more of your website's pages get indexed by Google.

Here are some steps for log file analysis that you can follow:

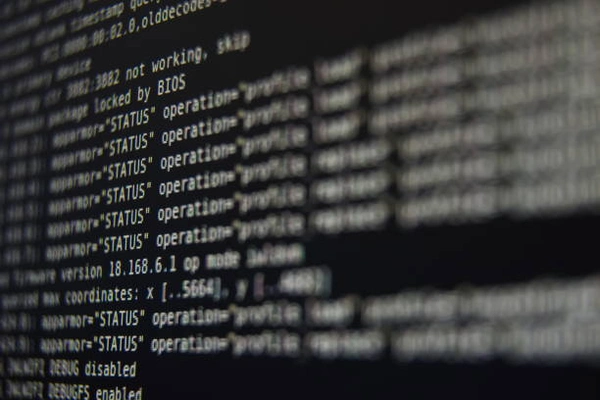

Before performing a log file analysis, make sure the log file you are going to use has the correct format. Take a look at the following sample log entry to understand the correct format:

28.301.15.1 - - [28/Oct/2022:11:20:01 -0400] "GET /product/type1/ HTTP/1.1" 200 21466 "https://example.com/product" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

Explanation of each component of the log file:
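The sample entry follows the combined log format supported by web servers such as Apache and Nginx. A minimal Python sketch that splits an entry into its components might look like this. The field names (`ip`, `identity`, `user`, `time`, and so on) and the `parse_log_line` function are our own labels, and a server configured with a custom format needs a different pattern.

```python
import re
from datetime import datetime

# Pattern for the combined log format used by the sample entry.
# Assumption: your server writes this format; custom formats need their own pattern.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) '                 # client IP address
    r'(?P<identity>\S+) '           # remote identity, usually "-"
    r'(?P<user>\S+) '               # authenticated user, usually "-"
    r'\[(?P<time>[^\]]+)\] '        # request timestamp with time zone offset
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '  # request line
    r'(?P<status>\d{3}) '           # HTTP status code
    r'(?P<size>\S+) '               # response size in bytes ("-" if none)
    r'"(?P<referrer>[^"]*)" '       # referring page
    r'"(?P<user_agent>[^"]*)"'      # user agent, e.g. Googlebot
)

SAMPLE = ('28.301.15.1 - - [28/Oct/2022:11:20:01 -0400] '
          '"GET /product/type1/ HTTP/1.1" 200 21466 '
          '"https://example.com/product" '
          '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"')


def parse_log_line(line):
    """Return the components of one log entry as a dict, or None if the line doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    # Convert the timestamp and status code into more convenient types.
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    entry["status"] = int(entry["status"])
    return entry


if __name__ == "__main__":
    for field, value in parse_log_line(SAMPLE).items():
        print(f"{field}: {value}")
```

Lines that return `None` don't match the expected format, which is a quick way to spot a log file that needs a different pattern.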

If you want to do a log file analysis, especially of crawling activity on the website, you need a filter that excludes requests from user agents other than search engine crawlers.
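A simple filter can keep only the entries whose user agent contains a known crawler name. In this sketch, `CRAWLER_TOKENS`, `is_crawler`, `filter_crawler_lines`, `make_line`, and the sample entries are hypothetical, and it assumes the combined log format from the sample entry above. Keep in mind that a user-agent string can be faked; Google documents a reverse DNS lookup for verifying that a request really comes from Googlebot.

```python
import re

# Combined log format, as in this guide's sample entry (assumption: your server uses it).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<identity>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

# Hypothetical crawler tokens to keep (lowercase); extend for the crawlers you care about.
CRAWLER_TOKENS = ("googlebot", "bingbot")

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BINGBOT_UA = "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def make_line(path, user_agent):
    """Build a hypothetical log entry for illustration."""
    return (f'203.0.113.7 - - [28/Oct/2022:11:20:01 -0400] "GET {path} HTTP/1.1" '
            f'200 512 "-" "{user_agent}"')


def is_crawler(user_agent, tokens=CRAWLER_TOKENS):
    """True if the user-agent string contains any crawler token (case-insensitive)."""
    ua = user_agent.lower()
    return any(token in ua for token in tokens)


def filter_crawler_lines(lines, tokens=CRAWLER_TOKENS):
    """Keep only well-formed log lines whose user agent looks like a search engine crawler."""
    kept = []
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and is_crawler(match.group("user_agent"), tokens):
            kept.append(line)
    return kept


if __name__ == "__main__":
    log = [
        make_line("/product/type1/", GOOGLEBOT_UA),
        make_line("/blog/", BINGBOT_UA),
        make_line("/cart/", BROWSER_UA),
    ]
    for line in filter_crawler_lines(log):
        print(line)
```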

If you use a log file analysis tool, you can use the filtering features it provides.

After confirming the format and filtering out requests from non-crawler user agents, you can start the log file analysis. Ask analysis questions to find behavioral patterns, issues, errors, and optimization opportunities. Here's a list of questions you can use:
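For example, one question you might ask is how crawler requests are spread across status code classes, since a high share of 4xx or 5xx responses points to errors worth fixing. The Python sketch below answers it, assuming the combined log format from the sample entry in this guide and treating any user agent containing "Googlebot" as Googlebot. `status_class_share`, `make_line`, and the entries are hypothetical.

```python
import re
from collections import Counter

# Combined log format, as in this guide's sample entry (assumption: your server uses it).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<identity>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def make_line(path, status=200, user_agent=GOOGLEBOT_UA):
    """Build a hypothetical log entry for illustration."""
    return (f'203.0.113.7 - - [28/Oct/2022:11:20:01 -0400] "GET {path} HTTP/1.1" '
            f'{status} 512 "-" "{user_agent}"')


def status_class_share(lines, crawler_token="Googlebot"):
    """Return the share of crawler requests in each status class, such as 2xx or 4xx."""
    classes = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and crawler_token.lower() in match.group("user_agent").lower():
            # "404" -> "4xx", "200" -> "2xx", and so on.
            classes[match.group("status")[0] + "xx"] += 1
    total = sum(classes.values())
    if total == 0:
        return {}
    return {status_class: count / total for status_class, count in classes.items()}


if __name__ == "__main__":
    log = [
        make_line("/a"),
        make_line("/b"),
        make_line("/c"),
        make_line("/old", 404),
        make_line("/x", 500, BROWSER_UA),  # not a crawler, so it is ignored
    ]
    print(status_class_share(log))
```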

That completes our explanation of the role of log files in SEO and how to use them in log file analysis. We hope this guide gives you useful new knowledge for your technical SEO optimization process.

If you need help doing a log file analysis, don't hesitate to use a professional SEO service that will optimize your website.
